MOA has been extended with an interface for developing and visualizing online recommender algorithms.

This simple example shows the functionality of the EvaluateOnlineRecommender task in MOA.

This task takes a rating predictor and a dataset (each training instance being a [user, item, rating] triplet) and evaluates how well the model predicts the rating, given the user and item, as more and more instances are processed. This resembles the online scenario of a recommender system, where new ratings from users arrive constantly and the system has to predict ratings for the items each user has not yet rated in order to decide which ones to recommend.
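The evaluation idea described above can be sketched as a loop over the triplets. This is a minimal illustration, assuming that each rating is predicted before the model is trained on it; the `Predictor` interface and the `GlobalMean` toy model are hypothetical names made up for this sketch and are not MOA's actual classes.

```java
class PrequentialSketch {
    /** Hypothetical minimal predictor interface (not MOA's API). */
    interface Predictor {
        double predict(int user, int item);
        void train(int user, int item, double rating);
    }

    /** Toy model: always predicts the mean of the ratings seen so far. */
    static class GlobalMean implements Predictor {
        private double sum = 0.0;
        private long count = 0;

        public double predict(int user, int item) {
            return count == 0 ? 0.0 : sum / count;
        }

        public void train(int user, int item, double rating) {
            sum += rating;
            count++;
        }
    }

    /** Predicts each rating first, then trains on it; returns the RMSE over all triplets. */
    static double prequentialRmse(Predictor model, double[][] triplets) {
        double sumSquaredError = 0.0;
        for (double[] t : triplets) {
            int user = (int) t[0];
            int item = (int) t[1];
            double rating = t[2];
            double error = rating - model.predict(user, item); // test on the unseen rating
            sumSquaredError += error * error;
            model.train(user, item, rating);                   // then learn from it
        }
        return Math.sqrt(sumSquaredError / triplets.length);
    }

    public static void main(String[] args) {
        // Made-up [user, item, rating] triplets, for illustration only.
        double[][] data = { {1, 10, 4}, {1, 11, 2}, {2, 10, 3} };
        System.out.println("RMSE: " + prequentialRmse(new GlobalMean(), data));
    }
}
```

Any predictor that implements the two methods can be dropped into the same loop, which is what makes this kind of evaluation convenient for comparing algorithms on a stream.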

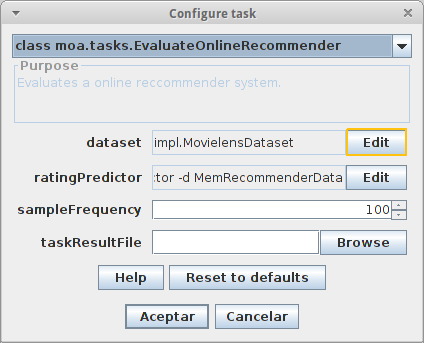

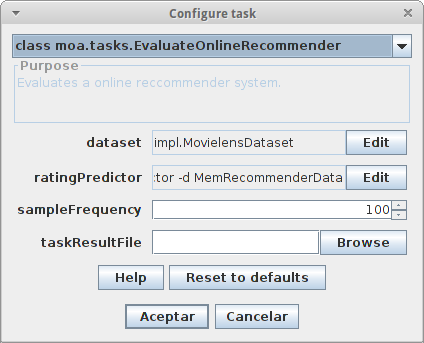

Let’s start by opening the MOA user interface. In the Classification tab, click Configure task and select ‘class moa.tasks.EvaluateOnlineRecommender’ from the list.

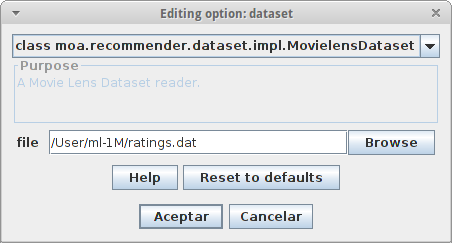

Now we need to select which dataset we want to process, so we click the corresponding button to edit that option.

The list offers several publicly available datasets. For this example, we will use the MovieLens 1M dataset, which can be downloaded from https://grouplens.org/datasets/movielens/. Finally, we select the file that contains the input data.
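In the MovieLens 1M distribution, the ratings are stored in `ratings.dat`, one per line, in the form `UserID::MovieID::Rating::Timestamp`. The following is a minimal sketch of turning such a line into a [user, item, rating] triplet. The class and method names are hypothetical, and this is not necessarily how MOA reads the file internally.

```java
class MovieLensLine {
    /** Simple holder for one [user, item, rating] triplet. */
    static class Rating {
        final int user;
        final int item;
        final double rating;

        Rating(int user, int item, double rating) {
            this.user = user;
            this.item = item;
            this.rating = rating;
        }
    }

    /** Parses one "UserID::MovieID::Rating::Timestamp" line; the timestamp is ignored. */
    static Rating parse(String line) {
        String[] fields = line.trim().split("::");
        if (fields.length < 3) {
            throw new IllegalArgumentException("Unexpected line format: " + line);
        }
        return new Rating(
            Integer.parseInt(fields[0]),
            Integer.parseInt(fields[1]),
            Double.parseDouble(fields[2]));
    }

    public static void main(String[] args) {
        // Example line in the ratings.dat format.
        Rating r = parse("1::1193::5::978300760");
        System.out.println(r.user + " " + r.item + " " + r.rating);
    }
}
```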

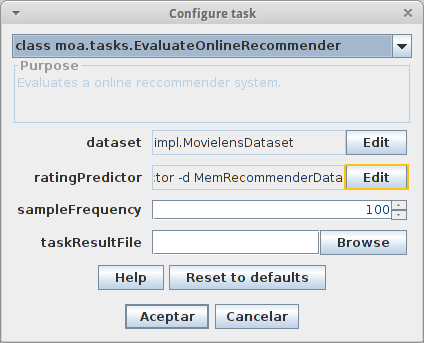

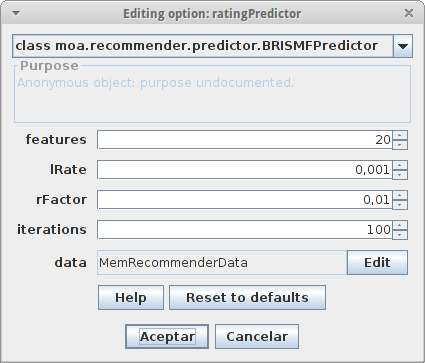

Once the dataset is configured, the next step is to choose which ratingPredictor to evaluate.

For the moment, only two are available: BaselinePredictor and BRISMFPredictor. The first is a very simple rating predictor, and the second is an implementation of a factorization algorithm described in "Scalable Collaborative Filtering Approaches for Large Recommender Systems" (Gábor Takács, István Pilászy, Bottyán Németh, and Domonkos Tikk). We choose the latter and find the following parameters:

- features – the number of features to be trained for each user and item

- learning rate – the learning rate of the gradient descent algorithm

- regularization ratio – the regularization ratio to be used in the Tikhonov regularization

- iterations – the number of iterations to be used when retraining user and item features (online training).

We can leave the default parameters for this dataset.
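To make the role of these four parameters more concrete, here is a minimal sketch of a generic regularized matrix factorization update trained by stochastic gradient descent. It is not the BRISMFPredictor implementation (for instance, it omits the bias handling described in the paper), and the parameter values used in `main` are arbitrary illustrations, not MOA's defaults.

```java
class MfSgdSketch {
    /** Predicted rating: dot product of the user and item feature vectors. */
    static double dot(double[] userFeatures, double[] itemFeatures) {
        double sum = 0.0;
        for (int k = 0; k < userFeatures.length; k++) {
            sum += userFeatures[k] * itemFeatures[k];
        }
        return sum;
    }

    /**
     * Updates one user vector and one item vector in place for a single observed rating.
     * The array length plays the role of "features"; the other arguments correspond to
     * the learning rate, the regularization ratio and the number of iterations.
     */
    static void update(double[] userFeatures, double[] itemFeatures, double rating,
                       double learningRate, double regularization, int iterations) {
        for (int it = 0; it < iterations; it++) {
            double error = rating - dot(userFeatures, itemFeatures);
            for (int k = 0; k < userFeatures.length; k++) {
                double p = userFeatures[k];
                double q = itemFeatures[k];
                // Gradient step on the squared error with an L2 (Tikhonov) penalty.
                userFeatures[k] += learningRate * (error * q - regularization * p);
                itemFeatures[k] += learningRate * (error * p - regularization * q);
            }
        }
    }

    public static void main(String[] args) {
        double[] user = {0.1, 0.1};   // features = 2 (illustrative value)
        double[] item = {0.1, 0.1};
        double rating = 4.0;
        System.out.println("error before: " + Math.abs(rating - dot(user, item)));
        update(user, item, rating, 0.01, 0.01, 10);
        System.out.println("error after:  " + Math.abs(rating - dot(user, item)));
    }
}
```

More features let the model capture more structure at a higher cost per update, while the regularization term keeps the feature values from growing without bound when a user or item has few ratings.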

Going back to the task configuration, the sampleFrequency parameter defines how often the accuracy measurements are taken, and the taskResultFile option lets us save the output of the task to a file. We can leave both at their default values.

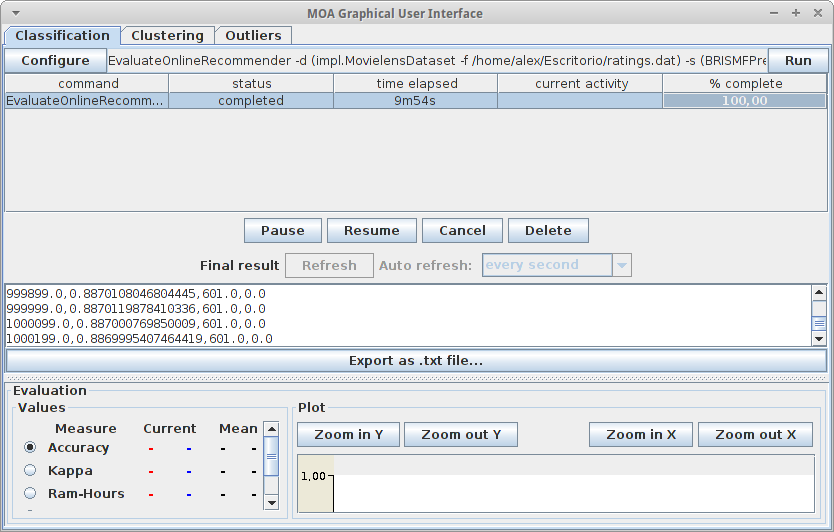

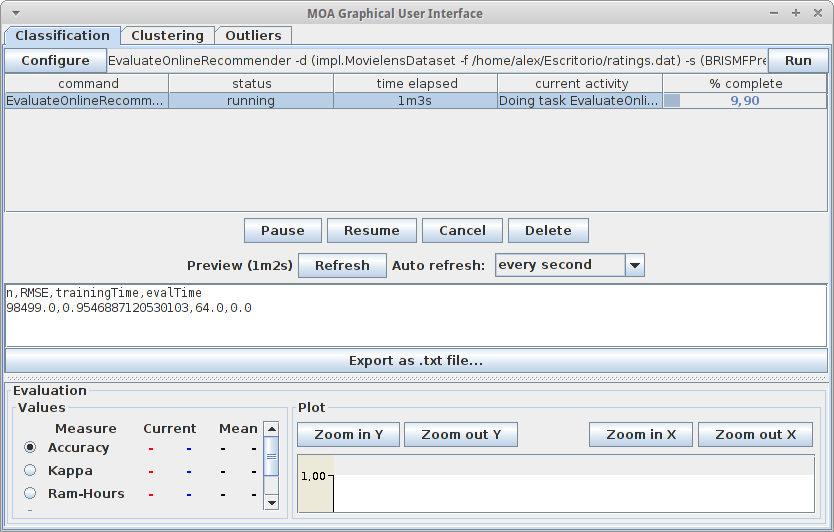

The task is now configured, and all that remains is to run it.

As the task progresses, the preview box shows the RMSE of the predictor computed over all instances processed so far, starting from the first one.

When the task finishes, we can see the final results: the RMSE of the predictor at each measured point.
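The relationship between the sampling frequency and the reported values can be sketched as follows. This is a minimal illustration, assuming that the RMSE is cumulative (from the first instance onward) and that a measurement is taken every `sampleFrequency` instances; the class and method names are hypothetical and the exact sampling points used by MOA may differ.

```java
import java.util.ArrayList;
import java.util.List;

class RmseSampler {
    /**
     * Given the prediction error of each processed instance, returns the cumulative
     * RMSE recorded after every sampleFrequency instances.
     */
    static List<Double> sample(double[] errors, int sampleFrequency) {
        List<Double> measurements = new ArrayList<>();
        double sumSquaredError = 0.0;
        for (int i = 0; i < errors.length; i++) {
            sumSquaredError += errors[i] * errors[i];
            int processed = i + 1;
            if (processed % sampleFrequency == 0) {
                measurements.add(Math.sqrt(sumSquaredError / processed));
            }
        }
        return measurements;
    }

    public static void main(String[] args) {
        // Made-up per-instance errors, measured every 2 instances.
        double[] errors = {3.0, 4.0, 0.0, 0.0};
        System.out.println(sample(errors, 2));
    }
}
```

Because each value covers every instance since the start, early mistakes made while the model has seen little data weigh on all later measurements, so the curve typically flattens as more instances are processed.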