Active Learning

MOA now supports the analysis of active learning (AL) classifiers. Active learning is a subfield of machine learning in which the classifier actively selects the instances it uses for training. This is relevant in areas where obtaining the label of an unlabeled instance is expensive or time-consuming, e.g. because it requires human interaction. AL generally reduces the number of training instances needed to reach a given performance compared to training without AL, thus reducing costs. A good overview of active learning is given by Burr Settles[1].

In stream-based (or sequential) active learning, one instance at a time is presented to the classifier. The active learner needs to decide whether it requests the instance’s label and uses it for training. Usually, the learner is limited by a given budget that defines the share of labels that can be requested.
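The loop described above can be sketched in plain Java. This is only an illustration and does not use MOA's classes: `StreamActiveLearningSketch`, `MajorityClassLearner`, `Result` and `run` are hypothetical names, the learner is a toy majority-class predictor, and the budget is enforced by requesting each label with probability equal to the budget (a simple random strategy).

```java
import java.util.Random;

/** Hypothetical sketch of a stream-based active learning loop; not MOA code. */
final class StreamActiveLearningSketch {

    /** Toy learner: predicts the class seen most often among the acquired labels. */
    static final class MajorityClassLearner {
        private final int[] counts;

        MajorityClassLearner(int numClasses) {
            this.counts = new int[numClasses];
        }

        int predict() {
            int best = 0;
            for (int c = 1; c < counts.length; c++) {
                if (counts[c] > counts[best]) {
                    best = c;
                }
            }
            return best;
        }

        void train(int label) {
            counts[label]++;
        }
    }

    /** Accuracy over the stream and the share of labels that were requested. */
    static final class Result {
        final double accuracy;
        final double labelRate;

        Result(double accuracy, double labelRate) {
            this.accuracy = accuracy;
            this.labelRate = labelRate;
        }
    }

    static Result run(int[] labels, int numClasses, double budget, long seed) {
        if (labels.length == 0) {
            throw new IllegalArgumentException("stream must not be empty");
        }
        Random random = new Random(seed);
        MajorityClassLearner learner = new MajorityClassLearner(numClasses);
        int correct = 0;
        int requested = 0;
        for (int label : labels) {
            // One instance at a time: predict before the true label is known.
            if (learner.predict() == label) {
                correct++;
            }
            // Decide whether to request the label; on average a share of
            // `budget` of all labels is requested (assumed random strategy).
            if (random.nextDouble() < budget) {
                learner.train(label);
                requested++;
            }
        }
        return new Result((double) correct / labels.length,
                (double) requested / labels.length);
    }

    public static void main(String[] args) {
        int[] labels = new int[10_000];
        Random generator = new Random(1L);
        for (int i = 0; i < labels.length; i++) {
            labels[i] = generator.nextDouble() < 0.8 ? 1 : 0;
        }
        Result result = run(labels, 2, 0.1, 42L);
        System.out.printf("accuracy=%.3f labelRate=%.3f%n",
                result.accuracy, result.labelRate);
    }
}
```

The returned label rate corresponds to the share of requested labels that the budget is meant to limit; a real strategy would replace the random decision with a more informed one.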

This update to MOA introduces a new tab for active learning. It provides several extensions to the graphical user interface and already contains multiple AL strategies.

Graphical User Interface Extensions

The tab’s graphical interface is based on the Classification tab, but it has been extended with additional functionality:

Result Table

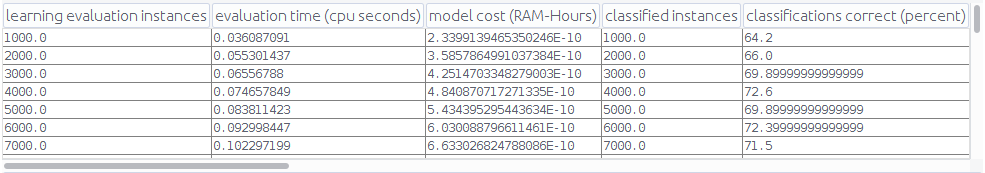

The result preview has been updated from a simple text field showing CSV data to an actual table:

Hierarchy of Tasks

In order to enable convenient and fast evaluation that provides reliable results, we introduced new tasks with a special hierarchy:

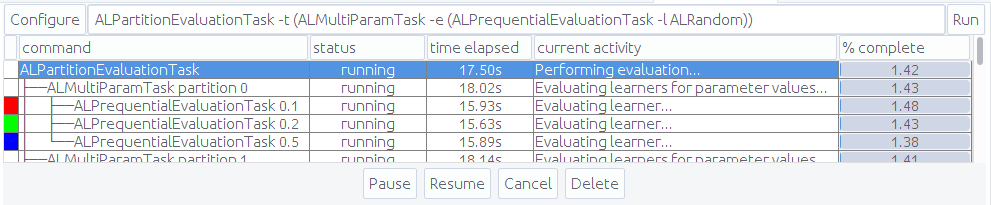

- ALPrequentialEvaluationTask: Perform prequential evaluation for any chosen active learner.
- ALMultiParamTask: Compare different parameter settings for the same algorithm by performing multiple ALPrequentialEvaluationTasks. The parameter to be varied can be selected freely from all parameters that are available.
- ALPartitionEvaluationTask: Split the data stream into several partitions and perform an ALMultiParamTask on each one. This allows for cross-validation-like evaluation.
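The nesting of the three task types can be pictured as two loops around a single evaluation run. The sketch below is not MOA code: `TaskHierarchySketch`, `PrequentialRun`, `split` and `runAll` are hypothetical names, and the round-robin split is an assumption, since how MOA actually partitions the stream is not described here.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of the task nesting; not MOA code. */
final class TaskHierarchySketch {

    /** Stand-in for one prequential run on one partition with one parameter value. */
    interface PrequentialRun {
        double evaluate(List<Integer> partition, double paramValue);
    }

    /** Assumed round-robin split: the i-th instance goes to partition i % k. */
    static List<List<Integer>> split(List<Integer> stream, int k) {
        List<List<Integer>> parts = new ArrayList<>();
        for (int p = 0; p < k; p++) {
            parts.add(new ArrayList<>());
        }
        for (int i = 0; i < stream.size(); i++) {
            parts.get(i % k).add(stream.get(i));
        }
        return parts;
    }

    /** Returns results[p][v]: the score of parameter value v on partition p. */
    static double[][] runAll(List<Integer> stream, int k,
                             double[] paramValues, PrequentialRun run) {
        List<List<Integer>> parts = split(stream, k);
        double[][] results = new double[k][paramValues.length];
        for (int p = 0; p < k; p++) {                       // partition level
            for (int v = 0; v < paramValues.length; v++) {  // multi-parameter level
                // Single prequential evaluation level.
                results[p][v] = run.evaluate(parts.get(p), paramValues[v]);
            }
        }
        return results;
    }

    public static void main(String[] args) {
        List<Integer> stream = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            stream.add(i);
        }
        // Placeholder "evaluation": partition size times parameter value.
        double[][] results = runAll(stream, 3, new double[] {0.1, 0.5},
                (part, value) -> part.size() * value);
        System.out.println(results.length + " partitions x "
                + results[0].length + " parameter values");
    }
}
```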

The tree structure of those tasks and their main parameters are now also indicated in the task overview panel on top of the window:

The assigned colors allow for identifying the different tasks in the graphical evaluation previews beneath the task overview panel.

The class MetaMainTask in moa.tasks.meta handles the general management of hierarchical tasks. It may be useful for other future applications.

Evaluation

The introduced task hierarchy requires an extended evaluation scheme. Each type of task has its own evaluation style:

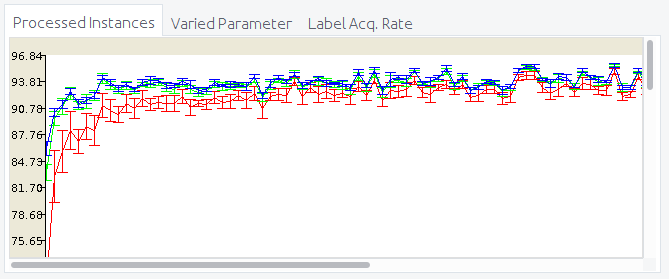

- ALPrequentialEvaluationTask: Results for one single experiment are shown. The graphical evaluation is equivalent to the evaluation of traditional classifiers.
- ALMultiParamTask: Results for the different parameter configurations are shown simultaneously in the same graph. Each configuration is indicated by a different color.
- ALPartitionEvaluationTask: For each parameter configuration, mean and standard deviation values calculated over all partitions are shown (see the following image).
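The aggregation over partitions amounts to a mean and a standard deviation per parameter configuration. A minimal sketch follows, assuming the results are held in a matrix indexed by partition and configuration. The class and method names are hypothetical, and the population form of the standard deviation (dividing by the number of partitions) is an assumption; MOA may use the sample form instead.

```java
/** Hypothetical sketch of aggregating results over partitions; not MOA code. */
final class PartitionStatsSketch {

    /** results[p][c] is the measure of configuration c on partition p. */
    static double[] meanPerConfig(double[][] results) {
        if (results.length == 0) {
            throw new IllegalArgumentException("need at least one partition");
        }
        int configs = results[0].length;
        double[] means = new double[configs];
        for (double[] partition : results) {
            for (int c = 0; c < configs; c++) {
                means[c] += partition[c];
            }
        }
        for (int c = 0; c < configs; c++) {
            means[c] /= results.length;
        }
        return means;
    }

    /** Population standard deviation per configuration (assumed variant). */
    static double[] stdDevPerConfig(double[][] results) {
        double[] means = meanPerConfig(results);
        double[] stdDevs = new double[means.length];
        for (double[] partition : results) {
            for (int c = 0; c < means.length; c++) {
                double diff = partition[c] - means[c];
                stdDevs[c] += diff * diff;
            }
        }
        for (int c = 0; c < means.length; c++) {
            stdDevs[c] = Math.sqrt(stdDevs[c] / results.length);
        }
        return stdDevs;
    }

    public static void main(String[] args) {
        // Three partitions, two parameter configurations (made-up numbers).
        double[][] accuracy = {{0.8, 0.6}, {0.9, 0.6}, {0.7, 0.6}};
        double[] means = meanPerConfig(accuracy);
        double[] stdDevs = stdDevPerConfig(accuracy);
        for (int c = 0; c < means.length; c++) {
            System.out.printf("config %d: mean=%.4f std=%.4f%n", c, means[c], stdDevs[c]);
        }
    }
}
```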

For ALMultiParamTasks and ALPartitionEvaluationTasks there are two additional types of evaluation:

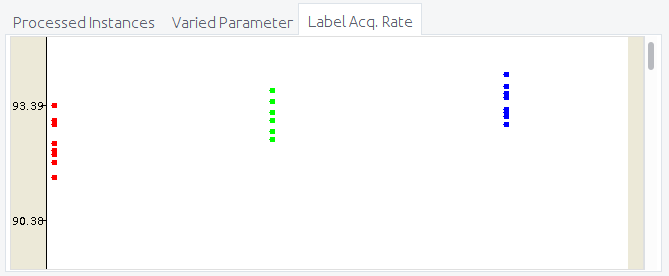

Any selected measure can be inspected in relation to the value of the varied parameter. The following image shows an example with the parameter values 0.1, 0.5 and 0.9 (X-axis) and the respective mean and standard deviation values of the selected measure (Y-axis).

The same can be done with regard to the rate of acquired labels (also called budget), as this measure is very important in active learning applications.

Algorithms

This update includes two main active learning strategies:

- ALRandom: Decides randomly whether an instance is used for training.
- ALUncertainty: Contains four active learning strategies that are based on uncertainty and explicitly handle concept drift (see Žliobaitė et al.[2], Cesa-Bianchi et al.[3]).
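To give an idea of what an uncertainty-based strategy with a budget can look like, here is a minimal sketch of a variable-threshold scheme in the spirit of [2]. It is not the ALUncertainty implementation: `VariableUncertaintySketch` and its members are hypothetical names, and the budget check (share of requested labels among all instances seen so far) is a simplifying assumption.

```java
/** Hypothetical sketch of a variable-threshold uncertainty strategy; not MOA code. */
final class VariableUncertaintySketch {
    private final double budget;   // maximum share of labels to request
    private final double step;     // relative threshold adjustment per decision
    private double threshold = 1.0;
    private long seen = 0;
    private long requested = 0;

    VariableUncertaintySketch(double budget, double step) {
        this.budget = budget;
        this.step = step;
    }

    /**
     * @param maxPosterior the classifier's highest class probability for the
     *                     current instance (low value means high uncertainty)
     * @return true if the label should be requested
     */
    boolean shouldRequestLabel(double maxPosterior) {
        seen++;
        // No request while the label share spent so far has reached the budget.
        if ((double) requested / seen >= budget) {
            return false;
        }
        if (maxPosterior < threshold) {
            // Uncertain prediction: request the label and tighten the threshold.
            threshold *= (1.0 - step);
            requested++;
            return true;
        }
        // Confident prediction: widen the threshold so that requests resume
        // even if the classifier stays confident, e.g. after a drift.
        threshold *= (1.0 + step);
        return false;
    }

    double getThreshold() {
        return threshold;
    }

    public static void main(String[] args) {
        VariableUncertaintySketch strategy = new VariableUncertaintySketch(1.0, 0.1);
        double[] posteriors = {0.5, 0.95, 0.95};
        for (double p : posteriors) {
            System.out.println(p + " -> request=" + strategy.shouldRequestLabel(p)
                    + ", threshold=" + strategy.getThreshold());
        }
    }
}
```

Because the threshold grows whenever the learner is confident, labels keep being requested over time, which is what allows such a strategy to notice changes in the stream.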

Writing a Custom Active Learner

MOA makes it easy to write your own classifier and evaluate it. This is no different for active learning. Any AL classifier written for MOA should implement the new interface ALClassifier, located in the package moa.classifiers.active, which is a simple extension of the Classifier interface. It adds the method getLastLabelAcqReport(), which returns true if the previously presented instance was added to the training set of the AL classifier, and thus allows for monitoring the number of acquired labels.
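The contract described above can be illustrated without MOA on the classpath. In the sketch below, `SimpleClassifier` and `SimpleALClassifier` are hypothetical, heavily reduced stand-ins for MOA's Classifier and ALClassifier interfaces, and the report method is modelled as a boolean following the description above; the real interfaces have more methods and should be checked in the MOA sources before implementing them.

```java
import java.util.Random;

/** Hypothetical sketch of a custom active learner; stand-in types, not MOA code. */
final class CustomActiveLearnerSketch {

    /** Reduced stand-in for a classifier interface. */
    interface SimpleClassifier {
        int predict(double[] features);

        void trainOnInstance(double[] features, int label);
    }

    /** Reduced stand-in for the AL extension: adds the label acquisition report. */
    interface SimpleALClassifier extends SimpleClassifier {
        boolean getLastLabelAcqReport();
    }

    /** Acquires labels at random and predicts the majority class among them. */
    static final class RandomMajorityLearner implements SimpleALClassifier {
        private final int[] classCounts;
        private final double budget;
        private final Random random;
        private boolean lastLabelAcquired = false;

        RandomMajorityLearner(int numClasses, double budget, long seed) {
            this.classCounts = new int[numClasses];
            this.budget = budget;
            this.random = new Random(seed);
        }

        @Override
        public int predict(double[] features) {
            int best = 0;
            for (int c = 1; c < classCounts.length; c++) {
                if (classCounts[c] > classCounts[best]) {
                    best = c;
                }
            }
            return best;
        }

        @Override
        public void trainOnInstance(double[] features, int label) {
            // The learner itself decides whether the label is acquired and used.
            lastLabelAcquired = random.nextDouble() < budget;
            if (lastLabelAcquired) {
                classCounts[label]++;
            }
        }

        @Override
        public boolean getLastLabelAcqReport() {
            return lastLabelAcquired;
        }
    }

    /** Counts acquired labels the way an evaluator could, via the report method. */
    static int countAcquiredLabels(SimpleALClassifier learner, double[][] xs, int[] ys) {
        int acquired = 0;
        for (int i = 0; i < xs.length; i++) {
            learner.trainOnInstance(xs[i], ys[i]);
            if (learner.getLastLabelAcqReport()) {
                acquired++;
            }
        }
        return acquired;
    }

    public static void main(String[] args) {
        double[][] xs = new double[1000][1];
        int[] ys = new int[1000];
        SimpleALClassifier learner = new RandomMajorityLearner(2, 0.3, 42L);
        System.out.println("acquired labels: " + countAcquiredLabels(learner, xs, ys));
    }
}
```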

For a general introduction to writing your own classifier in MOA, see this tutorial.

Remark

This update was developed at the Otto-von-Guericke-University Magdeburg, Germany, by Tuan Pham Minh, Tim Sabsch and Cornelius Styp von Rekowski, and was supervised by Daniel Kottke and Georg Krempl.

[1] Settles, Burr. “Active learning.” Synthesis Lectures on Artificial Intelligence and Machine Learning 6.1 (2012): 1-114.

[2] Žliobaitė, Indrė, et al. “Active learning with evolving streaming data.” Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, Berlin, Heidelberg (2011).

[3] Cesa-Bianchi, Nicolo, Claudio Gentile, and Luca Zaniboni. “Worst-case analysis of selective sampling for linear classification.” Journal of Machine Learning Research 7.Jul (2006): 1205-1230.